Batch #2 Applications Closed!

Co-Founder Matching Recap

Last night, we hosted a co-founder matching session as part of the AI safety founders community. 83 founders signed up for a two-hour session to mix and match, and we hope it leads to many more exciting startups taking flight.

We started with a presentation on founding AI safety companies by Finn, Seldon's co-founder, along with introductions by Ashyae Singh and Esben Kran.

We had the amazing founders of Workshop Labs and Andon Labs come by to give short introductions to their companies and interact with the aspiring AI safety founders.

With attendees from backgrounds such as senior engineering at FAANG, web3 founding, and ML research, the room was full of energy and discussion, and we look forward to the next one.

Thanks to everyone who made it an amazing night!

Building a Future Ready for Advanced AI

At Seldon, we believe that for-profit companies can shape AI safety and we're here to prove it.

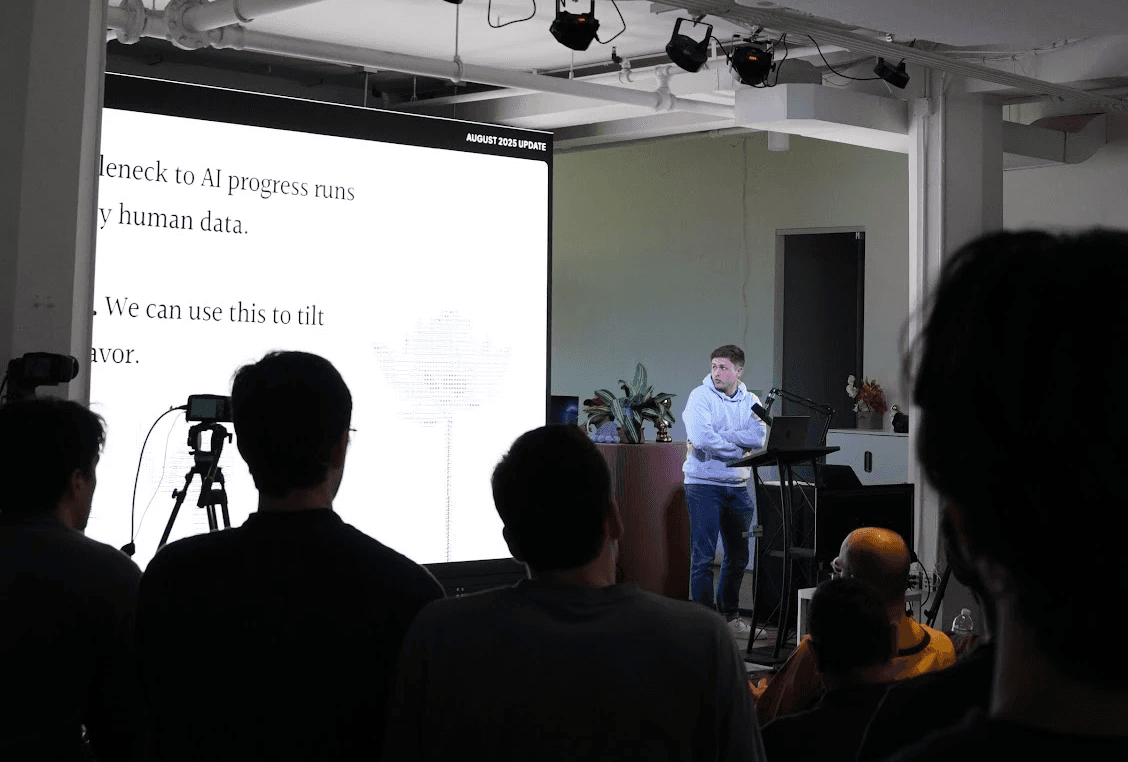

Last Friday, we closed our pilot program with the Seldon Grand Finale, an event with a keynote from Esben Kran, a demo by each of our four startups, and a panel of leaders in AI safety entrepreneurship.

Our pilot program has been three amazing months with inspiring founders who have now raised over $10M, sold security solutions to xAI and Anthropic, and patented new inventions for verifiable compute.

All of this while converting to Public Benefit Corporations, discussing the future of humanity, sharing dinners with leaders in this new field, and inspiring each other.

During the event, we had interesting demos by all of the founders:

Kristian presented Lucid Computing's exciting new direction and the importance of understanding where GPUs are and what they are processing.

Luke presented Workshop Labs' plan for addressing the disparities facing white-collar workers in a post-AGI economy.

Lukas presented Andon Labs' most recent experiments at xAI, Y Combinator, and Anthropic, which let chatbots run real-world businesses such as vending machines.

DeepResponse is still in stealth mode.

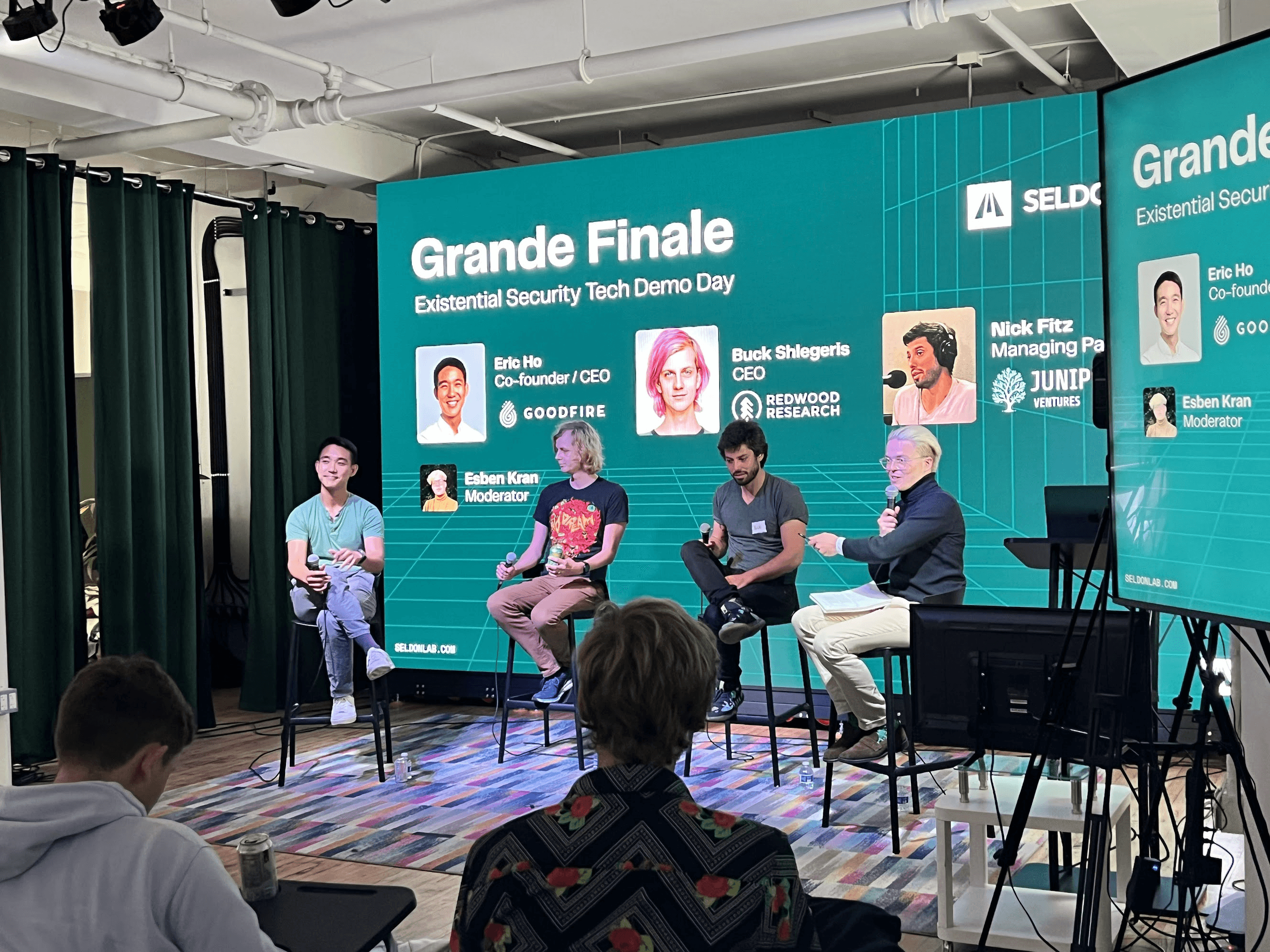

After the fantastic demos, we hosted a panel with the amazing Eric Ho, CEO of Goodfire, Buck Shlegeris, CEO of Redwood Research, and Nick Fitz, co-founder of Juniper Ventures, discussing some of the most important questions as we enter the AGI end game.

The questions discussed included when people expect to see mass destruction due to AGI, how startups can impact frontier AI development, and how to shape companies for large-scale, blitzscaling impact on the world.

In general, the diversity of the investors, founders, and researchers who came to our event is a testament to the new path we're shaping at Seldon in for-profit AI safety.

We had ample time to mingle, in addition to our late-night afterparty, and many came up to ask where they could apply, when we'd run our next program, and how they could contribute to this field.

At Seldon, we're committed to making this field a success. We're excited to continue nurturing this growing network of startups and collaborators.

Building the infrastructure that secures humanity's future is everyone's responsibility, and we've learnt that if we don't do it, no one will.

Become a part of Seldon's ambitious network by applying to our next batch later this year!

Dec 9, 2025


Why Technical AI Safety Is The Only Path to AGI Governance

What does the Big Red Button do?

In the last year, interest in technical AI safety work has dipped in favor of governance, demonstrations, and evaluations. While these efforts matter, they're putting the cart before the horse.

Today, I'll make the strong case for technical AI safety: Without the technology to secure AI systems, governance will be impossible.

The Inconvenient Truth About AI Governance

Imagine that tomorrow the US President and the Chinese Premier shake hands on a historic AI safety treaty. They agree to halt all dangerous AI development. They even install a "Big Red Button" to enforce it.

But what exactly would that button do?

By default, it would have to bomb every data center outside treaty zones, because without auditing mechanisms we can't verify compliance. And if we lack verified compute, the button might need to destroy treaty-compliant data centers too, just to be sure.

It's mutually assured destruction with extra steps.

Why Technical Solutions Create Their Own Adoption

History shows us that when technical solutions work, adoption follows naturally:

The Climate Parallel

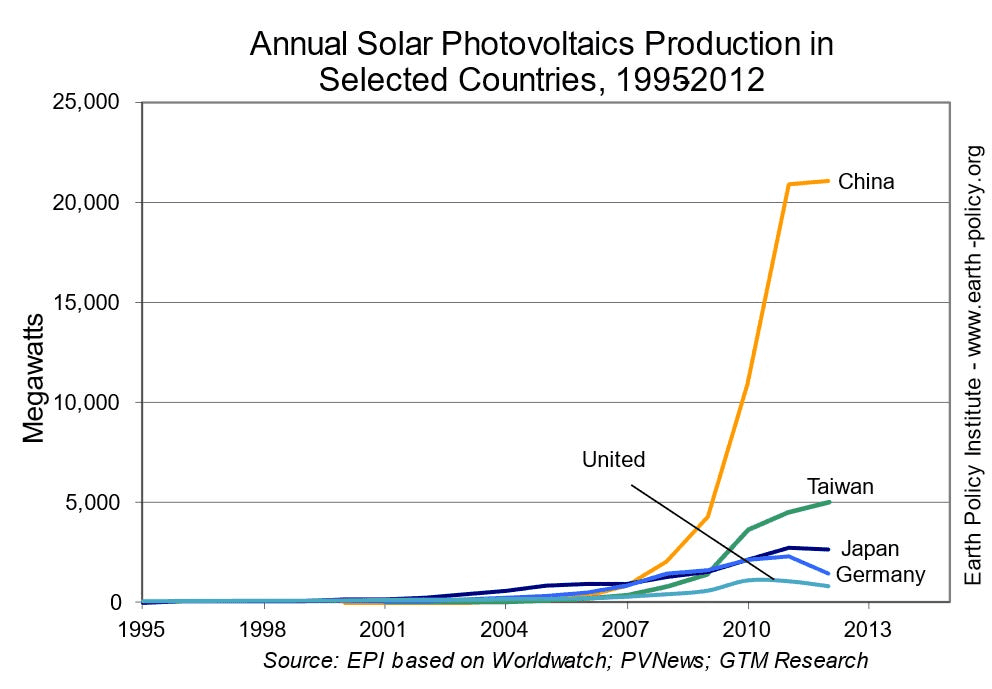

Look at Chinese solar panel installations over the last decade. Once the technology became cost-effective, deployment exploded, with no global treaty required. Technical progress created economic incentives that aligned with climate goals.

The Security Precedent

On our side of the fence, Cloudflare now protects over 20% of the internet. Not because regulations mandate DDoS protection, but because the technology is so effective and accessible that using it became the obvious choice. And if the Big Red Button needs technology that Cloudflare provides (such as AI labyrinths), Cloudflare can supply it because of the scale its for-profit model has achieved.

The Path Forward: Infrastructure Before Governance

At Seldon, we're building the technical infrastructure that makes AI safety not just possible, but default. Here's what real governance-enabling technology looks like:

Hardware-level guarantees (like our portfolio company Lucid Computing's remote attestation)

Automated real-world incident systems (Andon Labs' physical agent evaluations for Anthropic and xAI)

Real-time monitoring and response (DeepResponse's incredible speed-up of detection and response)

Cryptographic verification (Workshop Labs' verifiably private data and model pipeline)

The Strong Case

The strong case for technical AI safety isn't that governance doesn't matter. It's that governance without technical solutions is merely theater.

When we have:

Reliable auditing mechanisms deployed in every data center

Hardware-level security that can't be bypassed

Automated systems that detect and prevent dangerous capabilities

Secure proofs of model behavior

Then, and only then, can governance actually govern.

The alternative is a world where our only enforcement mechanism is destruction, where verification requires devastation, and where the Big Red Button is just another name for giving up.

Technical AI safety isn't just about building aligned AGI or adding another layer of security.

It's the foundation that makes all governance possible.

And at Seldon, we're not going to wait for the perfect policies. We want you to build the technologies that make these policies enforceable. Because when the leaders of the world finally agree on AI safety, we want their Big Red Button to do something more sophisticated than "destroy everything and hope for the best".

The future of AI governance runs through technical innovation. Everything else is just politics.

Aug 14, 2025


Announcing the Seldon Summer 2025 Batch

We're thrilled to introduce Seldon’s Act 1: Accelerated Technology Companies, our trailblazing pilot batch bringing together world-class AI safety and assurance startups. This milestone accelerates our vision of building the infrastructure that ensures humanity's long-term safety as AGI reshapes our world.

Seldon's First Act

What is Act 1?

It’s our inaugural cohort: founding teams dedicated to advancing AI safety. These startups benefit from intimate sessions with elite guests (e.g., Geoff Ralston, former YC president), hands-on support across all aspects of company building, and access to a global talent network.

Why it matters.

As AGI grows more pervasive, we face an increasing number of catastrophic risks. Through Act 1, we're scaling technological safety in tandem, because solving tomorrow’s threats requires building a robust sociotechnical infrastructure today. If there are a hundred existential challenges ahead, we need a hundred decacorn startups to address them.

Meet The First Four Companies

Andon Labs

Building safe autonomous organizations: AI-run systems that operate independently of human intervention while remaining aligned and safe in real-world deployments.

Lucid Computing

Offering a hardware-rooted, zero-trust platform that cryptographically verifies AI chip usage and data processing location, ensuring regulatory compliance and reducing overhead in sensitive environments.

Workshop Labs

Providing personal sovereignty over AI models by training billions of models, one for each person, and ensuring ownership stays in the hands of the professionals who provide the training data.

DeepResponse

Deploying autonomous cyber defense operations for detection and response in the AGI era.

Meet Them This Friday

This Friday, August 15th, we are hosting an exclusive event to meet our founders, along with the CEO of Goodfire, Eric Ho, and the CEO of Redwood Research, Buck Shlegeris, among many other invited guests.

We're excited to see you there!

Godspeed

Aug 13, 2025


AGI Security: Rethinking Society's Infrastructure

AGI security is the proactive security-first infrastructure for a world where AI systems can reason, adapt, and act autonomously across digital and physical domains.

Specifically, it posits that:

Powerful AI will by default destroy our social, technological, and civilizational infrastructure due to the speed at which it is being developed.

Attacks become the default, not an infrequent occurrence, due to the fall in the price of intelligence and the proliferation of powerful AI.

Attack surfaces expand from the digital to the physical, cognitive, and institutional, for example through drones, mass media, and information control.

As a result, we need to rethink how we construct our societal infrastructure. We need to go beyond ‘adding a security layer’ to embedding security into the very foundations of anything we build.

Without this, the threats of powerful AI (such as Sentware, loss of control, societal disruption, and civilization-scale manipulation) will easily penetrate our weak security posture, to the benefit of whoever wishes to destabilize or gain control.

This post was originally published on Esben's blog.

Call For Technologies (CFT)

However, if we act today, we can be the ones to shape this field. These are but a few ideas for what we need to create today to lay the foundation of tomorrow:

Model Security and Control

Runtime containment: Hardware/software boundaries that prevent model breakout and enforce access control

Capability capping: Cryptographic limits that require multi-party authorization rather than single-party company control

Behavioral attestation: Continuous (inference-time) alignment verification instead of today's ad hoc pre-deployment evaluation

Example: NVIDIA H100 Confidential Computing (<5% overhead)
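To make the capability-capping idea concrete, here is a minimal Python sketch of k-of-n authorization. A gated capability unlocks only when at least `threshold` distinct, known parties have approved exactly that request. This is an illustrative assumption, not how any Seldon company implements it. The names `Approval`, `sign_request`, and `authorize` are hypothetical. Per-party HMAC keys stand in for the public-key or threshold signatures a real system would more likely use, because here the verifier has to hold every party's key.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class Approval:
    party: str   # hypothetical party identifier, e.g. "lab" or "auditor"
    tag: bytes   # HMAC-SHA256 over the request, made with that party's key


def sign_request(key: bytes, request: bytes) -> bytes:
    """Compute one party's approval tag for a specific capability request."""
    return hmac.new(key, request, hashlib.sha256).digest()


def authorize(request: bytes, approvals: list[Approval],
              party_keys: dict[str, bytes], threshold: int) -> bool:
    """Return True only if at least `threshold` distinct known parties
    produced a valid tag for exactly this request (simplified sketch:
    shared-secret HMACs instead of real multi-party signatures)."""
    valid_parties: set[str] = set()
    for approval in approvals:
        key = party_keys.get(approval.party)
        if key is None:
            continue  # approvals from unknown parties are ignored
        expected = sign_request(key, approval_request := request)
        # Constant-time comparison avoids leaking tag bytes via timing.
        if hmac.compare_digest(expected, approval.tag):
            valid_parties.add(approval.party)  # repeats count only once
    return len(valid_parties) >= threshold


if __name__ == "__main__":
    keys = {"lab": b"lab-secret", "auditor": b"auditor-secret",
            "regulator": b"regulator-secret"}
    request = b"unlock capability: example-tool"
    approvals = [
        Approval("lab", sign_request(keys["lab"], request)),
        Approval("lab", sign_request(keys["lab"], request)),  # duplicate party
    ]
    print(authorize(request, approvals, keys, threshold=2))  # False
    approvals.append(Approval("auditor", sign_request(keys["auditor"], request)))
    print(authorize(request, approvals, keys, threshold=2))  # True
```

Counting distinct parties rather than raw approvals is the point of the design. A single company cannot reach the threshold by approving its own request several times.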

Infrastructure Hardening

Supply chain verification: Privacy-preserving component tracking from manufacture to deployment

Air-gap capabilities: Millisecond physical isolation and shutdown

Redundant sovereignty: Critical systems replicated across geopolitical boundaries

Attack-resilient design: Run-time monitoring and forensics assuming constant breaches

Societal Autonomy Protection

Manipulation detection: Identifying influence vs information

Authenticity verification: Human vs AI-generated content

Cognitive firewalls: Filtering AI access to human attention

Autonomy audits: Measuring reductions in human autonomy

Deployment Infrastructure

Silicon-level security: Compute use attestation and auditing

Multilayer identification systems: Human / AI / hybrid

Capability attestation and capability-based permissions: Enforcement of tool-AI level control

Rollback capabilities: Logging at every step with enforcement of zero destructive changes
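Rollback capabilities can be illustrated with a toy undo log. The sketch below is a hypothetical `ReversibleStore` and assumes state lives in a simple key-value map. Every write records the value it overwrote. There is deliberately no delete operation, so no change is destructive. `rollback` undoes writes newest-first back to a checkpoint. A real system would also need durable, tamper-evident logs, and this sketch shows only the core idea.

```python
from typing import Any

# Sentinel meaning "this key did not exist before the write".
_MISSING = object()


class ReversibleStore:
    """Hypothetical key-value store in which every write can be undone."""

    def __init__(self) -> None:
        self._data: dict[str, Any] = {}
        self._log: list[tuple[str, Any]] = []  # (key, value before the write)

    def set(self, key: str, value: Any) -> None:
        """Log the previous value, then write. No delete method exists,
        so every change stays reversible."""
        self._log.append((key, self._data.get(key, _MISSING)))
        self._data[key] = value

    def get(self, key: str) -> Any:
        return self._data[key]

    def __contains__(self, key: str) -> bool:
        return key in self._data

    def checkpoint(self) -> int:
        """Return the current log position to roll back to later."""
        return len(self._log)

    def rollback(self, checkpoint: int) -> None:
        """Undo every write made after `checkpoint`, newest first."""
        while len(self._log) > checkpoint:
            key, previous = self._log.pop()
            if previous is _MISSING:
                del self._data[key]  # key was created after the checkpoint
            else:
                self._data[key] = previous


if __name__ == "__main__":
    store = ReversibleStore()
    store.set("firewall_rule", "deny-all")
    cp = store.checkpoint()
    store.set("firewall_rule", "allow-agent")
    store.rollback(cp)
    print(store.get("firewall_rule"))  # deny-all
```

Undoing newest-first matters when the same key was written several times after a checkpoint. Each undo restores the value that the next-older write had seen.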

Examples

Hardware and compute infrastructure: Location verification

AI network protocol defenses: Agent labyrinths

Secure AGI deployment systems: Evaluation

Verification-as-a-service: Content moderation

Tool-AI for security: Digital forensics

Provably secure systems: Safeguarded AI

Scale, Scale, Scale

One of the most important factors in AGI security is whether we can reach the scale necessary within the allotted time.

I believe we can, and the evidence is in. We’re already at over $50M in value in Seldon, our AGI security lab program, and have 10x’d our investment in Goodfire with Juniper Ventures.

For founders, this means aiming for unicorn growth within 12 months. For investors, it means radical levels of investment in the earliest stages of an industry that will transform our society in the coming years.

AGI Security

This is the most exciting opportunity in our lifetime. We stand at the precipice of a new world and we can be the ones to shape it.

The core of what makes us human will need guardians and there’s no one else but you to step up.

Pioneering startups are already running full speed ahead.

Join them!

Jun 16, 2025
