Why Technical AI Safety Is The Only Path to AGI Governance

What does the Big Red Button do?

Over the past year, interest has shifted away from technical AI safety work and toward governance, demonstrations, and evaluations. These efforts matter, but on their own they put the cart before the horse.

Today I'll make the strong case for technical AI safety: without the technology to secure AI systems, governance is impossible.

The Inconvenient Truth About AI Governance

Imagine that tomorrow the US President and the Chinese Premier shake hands on a historic AI safety treaty. They agree to halt all dangerous AI development. They even install a "Big Red Button" to enforce it.

But what exactly would that button do?

By default, it would have to bomb every data center outside the treaty zones, because without auditing mechanisms we have no way to verify compliance. And if we can't verify what is running on the compute inside those zones, the button might need to destroy treaty-compliant data centers too, just to be sure.

It's mutually assured destruction with extra steps.

Why Technical Solutions Create Their Own Adoption

History shows us that when technical solutions work, adoption follows naturally:

The Climate Parallel

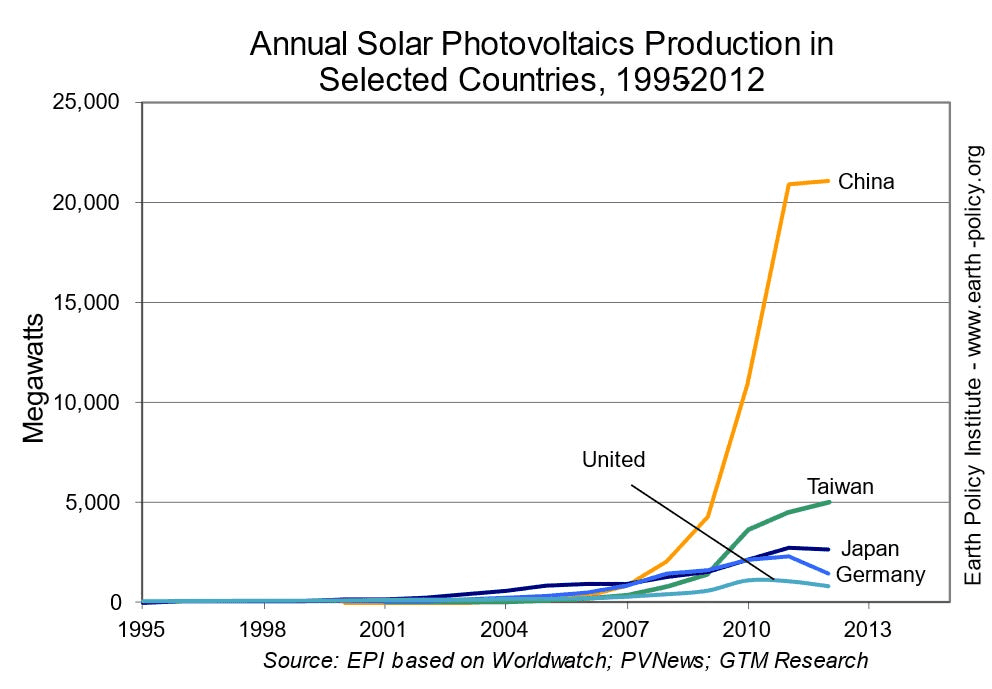

Look at Chinese solar panel installations over the last decade. Once the technology became cost-effective, deployment exploded, and no global treaty was required. Technical progress created economic incentives that aligned with climate goals.

The Security Precedent

On our side of the fence, Cloudflare now protects over 20% of the internet. That isn't because regulations mandate DDoS protection; it's because the technology is so effective and accessible that using it became the obvious choice. And if the Big Red Button ever needs technology that Cloudflare already offers (such as AI Labyrinth), Cloudflare can supply it at scale, because its for-profit business has already paid to build it.

The Path Forward: Infrastructure Before Governance

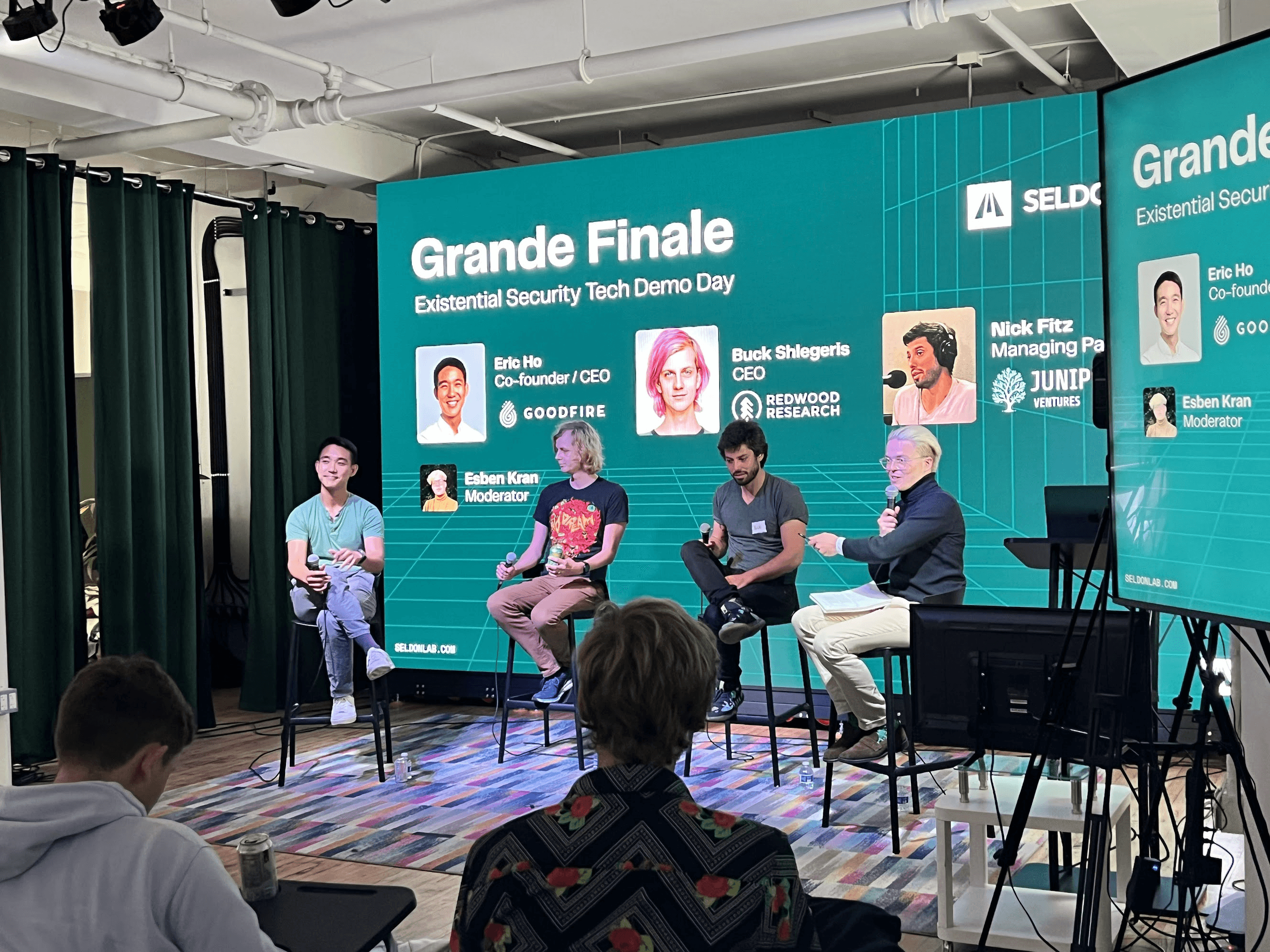

At Seldon, we're building the technical infrastructure that makes AI safety not just possible but the default. Here's what real governance-enabling technology looks like:

Hardware-level guarantees (like our portfolio company Lucid Computing's remote attestation)

Automated real-world incident testing (Andon Labs' physical-agent evaluations for Anthropic and xAI)

Real-time monitoring and response (Deep Response's incredible speed-up of detection and response)

Cryptographic verification (Workshop Labs' verifiably private data and model pipeline)

The Strong Case

The strong case for technical AI safety isn't that governance doesn't matter. It's that governance without technical solutions is merely theater.

When we have:

Reliable auditing mechanisms deployed in every data center

Hardware-level security that can't be bypassed

Automated systems that detect and prevent dangerous capabilities

Verifiable proofs of model behavior

Then, and only then, can governance actually govern.

The alternative is a world where our only enforcement mechanism is destruction, where verification requires devastation, and where the Big Red Button is just another name for giving up.

Technical AI safety isn't just about building aligned AGI or adding another layer of security.

It's the foundation that makes all governance possible.

At Seldon, we're not waiting for perfect policies. We want you to build the technologies that will make those policies enforceable. Because when world leaders finally agree on AI safety, we want their Big Red Button to do something more sophisticated than "destroy everything and hope for the best."

The future of AI governance runs through technical innovation. Everything else is just politics.